Automation Versus AI Inside Go High Level

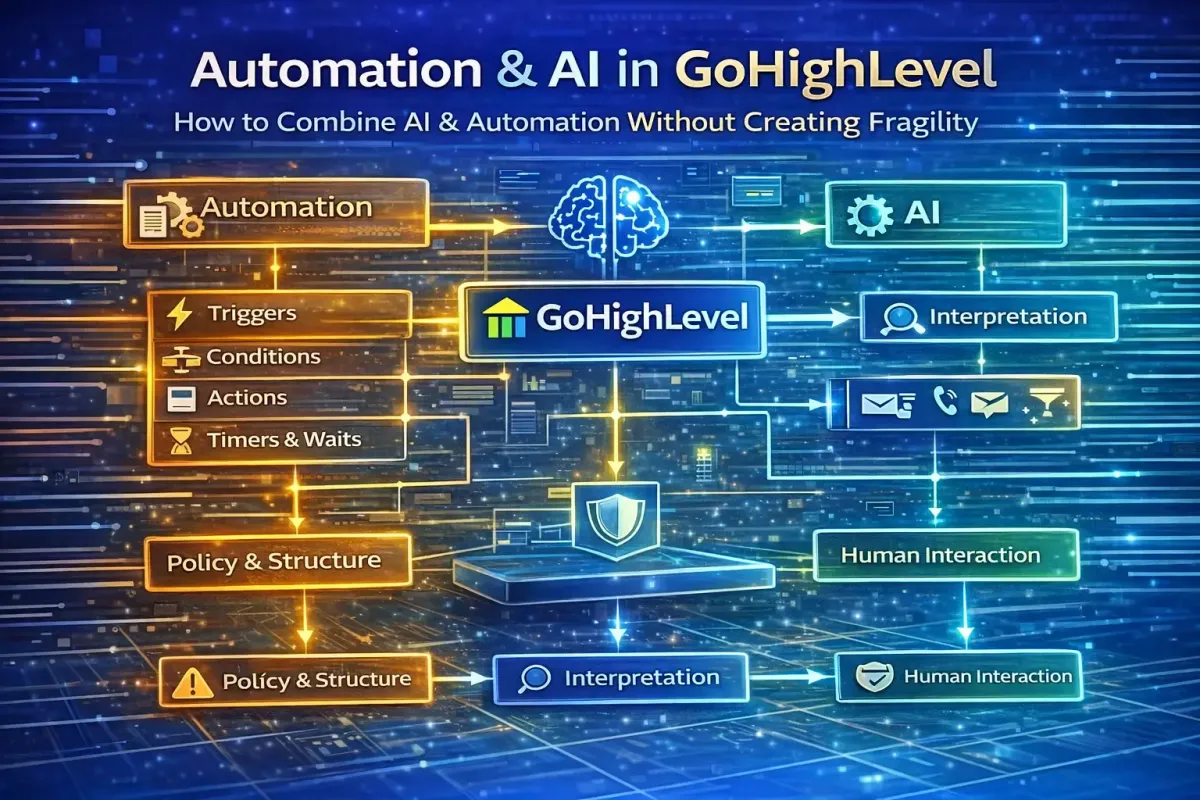

Go High Level (GHL) provides two distinct but complementary capabilities that are often misunderstood, misapplied, or incorrectly blended:

Automation: deterministic, rule-based execution of predefined business logic

AI: probabilistic, language-driven interpretation and generation

Most underperforming GHL accounts do not fail because they lack AI. They fail because their automation foundations are weak, inconsistent, or unmanaged. Conversely, many technically sophisticated accounts collapse under their own complexity because they attempt to use automation to replicate judgment—something it was never designed to do.

The highest-performing GHL implementations use a clear separation of responsibilities:

Automation for structure, compliance, and scale

AI for interpretation, adaptation, and human-like interaction

This guide explains the difference precisely, shows where most accounts go wrong, and outlines a future-proof architectural model for using automation and AI together—without fragility.

1. Automation vs AI: Two Fundamentally Different Systems

Before discussing features, pricing, or use cases, it is critical to understand that automation and AI solve different classes of problems.

Automation and AI are not competing technologies inside Go High Level. They are different layers of the same operating model.

Automation answers the question:

“What should happen when X occurs?”

AI answers a different question:

“What does this mean, and how should the system respond?”

Confusing these roles is the root cause of most broken GHL accounts.

2. What “Automation” Means Inside Go High Level

Definition (GHL Context)

Automation in Go High Level refers to explicit, rule-based workflows that execute predefined actions when specific conditions are met.

Automation does not reason.

Automation does not interpret.

Automation executes.

If the same inputs are provided, the outcome is identical every time.

Core Automation Components in GHL

Automation inside GHL is constructed using a small number of deterministic building blocks:

Triggers initiate workflows when events occur, such as form submissions, tag additions, stage changes, or inbound messages

Conditions evaluate Boolean logic using if/else rules, filters, and comparisons

Actions perform predictable outcomes such as sending messages, moving pipeline stages, applying tags, or creating tasks

Timers and waits control sequencing, delays, and business-hour constraints

Branching logic routes contacts down predefined paths

These components form the infrastructure layer of the platform.
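To make the building blocks concrete, here is a minimal Python sketch that models a workflow as a trigger, an ordered list of condition branches, and default actions. It is not GHL's API or export format: `Workflow`, `Branch`, the event names, and the action strings (including `wait:business_hours`, which stands in for a timer step) are hypothetical. The point is structural: nothing in `run` interprets anything, so the same event and contact always produce the same action list.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

# Hypothetical model of a rule-based workflow; not GHL's actual API or data format.
Contact = Dict[str, Any]  # e.g. {"tags": {"high_intent"}, "stage": "New Lead"}


@dataclass
class Branch:
    condition: Callable[[Contact], bool]  # pure Boolean test on contact data
    actions: List[str]                    # action names to run when the test passes


@dataclass
class Workflow:
    trigger: str                          # event name that starts this workflow
    branches: List[Branch]                # evaluated in order; first match wins
    default_actions: List[str] = field(default_factory=list)

    def run(self, event: str, contact: Contact) -> List[str]:
        """Return the actions to execute. Identical inputs always give identical output."""
        if event != self.trigger:
            return []                     # this workflow ignores other events
        for branch in self.branches:
            if branch.condition(contact):
                return list(branch.actions)
        return list(self.default_actions)


# Example: a form-submission workflow with one branch and a fallback path.
# "wait:business_hours" stands in for a timer/wait step (hypothetical action name).
form_workflow = Workflow(
    trigger="form_submitted",
    branches=[
        Branch(lambda c: "high_intent" in c.get("tags", set()),
               ["assign_owner", "move_stage:Qualified"]),
    ],
    default_actions=["wait:business_hours", "send_sms:welcome"],
)
```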

Examples of Pure Automation

Pure automation scenarios include:

When a form is submitted, move the contact to a defined pipeline stage

If no reply occurs within three days, send a reminder SMS

When a tag indicating high intent is applied, assign an owner

If an appointment is missed, enter a no-show recovery sequence

In each case, the behaviour is predictable, auditable, and repeatable.
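The time-based example (“no reply within three days”) hides two decisions worth making explicit: what counts as a reply, and when the clock starts. The sketch below is a hypothetical Python rendering, not a GHL feature: `needs_reminder`, `RULES`, and the event and action names are illustrative. It treats a reply as valid only if it arrived after the last outbound message, and the event-driven examples become a plain lookup table.

```python
from datetime import datetime, timedelta
from typing import List, Optional

# Hypothetical event -> action table for the event-driven examples above.
RULES = {
    "form_submitted": ["move_stage:Enquiry"],
    "tag_added:high_intent": ["assign_owner"],
    "appointment_missed": ["enter_workflow:no_show_recovery"],
}
REMINDER_AFTER = timedelta(days=3)


def actions_for(event: str) -> List[str]:
    """Deterministic lookup: unknown events produce no actions."""
    return list(RULES.get(event, []))


def needs_reminder(last_outbound: datetime, last_reply: Optional[datetime], now: datetime) -> bool:
    """True when nothing has come back since our last message and three days have passed."""
    replied = last_reply is not None and last_reply >= last_outbound
    return not replied and now - last_outbound >= REMINDER_AFTER
```

A reply that predates the last outbound message (for example, an answer to an earlier campaign) does not stop the reminder, which is usually the intended behaviour.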

Strengths of Automation

Automation excels at:

Predictability and consistency

Auditability and traceability

Explainable execution that supports GDPR and other compliance obligations

Scaling across tens of thousands of contacts

Enforcing compliance, timing rules, and ownership

Straightforward testing and debugging

Automation is infrastructure. Infrastructure must be reliable.

Limitations of Automation

Automation fails when asked to:

Interpret free-text responses

Handle ambiguity or nuance

Adapt to novel phrasing or emotional context

Scale decision trees beyond simple logic

Handle edge cases without exponential branching

Automation becomes brittle when over-extended. It is not intelligence—it is execution.
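The phrase “exponential branching” can be taken literally. If a workflow checks k independent yes/no conditions and every combination needs its own handling, it has 2^k distinct paths. A short Python calculation shows how quickly that outgrows anything a team can test or maintain:

```python
def path_count(conditions: int, outcomes_per_condition: int = 2) -> int:
    """Distinct end-to-end paths when every combination of outcomes is handled separately."""
    return outcomes_per_condition ** conditions


for k in (3, 5, 10, 20):
    print(f"{k:>2} yes/no conditions -> {path_count(k):,} paths")
```

Ten conditions already mean 1,024 paths; twenty mean 1,048,576. Real workflows prune many combinations, so this is an upper bound, but it explains why decision trees stop scaling long before they cover real-world phrasing.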

3. What “AI” Means Inside Go High Level

Definition (GHL Context)

AI in Go High Level refers primarily to large language model (LLM)-powered systems that interpret natural language, infer intent, and generate contextually appropriate responses.

AI does not reliably execute business logic.

AI interprets and responds probabilistically.

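The same input can yield different outputs from one run to the next, because responses are sampled rather than computed by fixed rules, and small changes in context can shift them further.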
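Why the same input can produce different outputs is easiest to see with a toy model. Real LLMs sample one token at a time from a probability distribution; the Python sketch below simplifies that to sampling a whole reply from a few scored candidates using temperature-scaled softmax. The candidate replies and scores are made up for illustration. At temperature 0 it always returns the top candidate (deterministic); above 0, repeated calls can differ.

```python
import math
import random
from typing import Dict


def sample_reply(scores: Dict[str, float], temperature: float, rng: random.Random) -> str:
    """Toy stand-in for LLM decoding: temperature-scaled softmax sampling over whole replies."""
    if temperature <= 0:
        return max(scores, key=scores.get)  # greedy decoding: always the top-scoring reply
    top = max(scores.values())
    # Subtracting the max keeps exp() numerically stable without changing the probabilities.
    weights = [math.exp((s - top) / temperature) for s in scores.values()]
    return rng.choices(list(scores), weights=weights, k=1)[0]


# Illustrative candidates and scores (not from any real model).
scores = {
    "Happy to walk you through pricing.": 2.0,
    "Here is a quick summary of our packages.": 1.8,
    "Could you share what budget you had in mind?": 1.5,
}
rng = random.Random(42)
sampled = {sample_reply(scores, 1.0, rng) for _ in range(200)}  # several distinct replies
greedy = {sample_reply(scores, 0.0, rng) for _ in range(200)}   # exactly one reply
```

This variability is harmless in a reply's tone and risky in business logic, which is the distinction the rest of this guide builds on.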

Core AI Capabilities in GHL

AI features in GHL are designed to sit at the interaction layer, not the infrastructure layer. They include:

Conversational messaging across SMS, chat, and social platforms

Voice-based interaction and call handling

Natural language lead qualification

Content generation for emails, reviews, funnels, and summaries

Workflow assistance and automation guidance

AI excels where inputs are messy, human, or ambiguous.

Examples of Effective AI Usage

AI is well-suited to:

Interpreting why a lead is hesitant rather than simply that they are hesitant

Responding differently to pricing objections versus timing objections

Summarising long chat or call histories

Understanding unstructured enquiries

Handling conversational booking and rescheduling

AI absorbs variability that would otherwise explode automation complexity.

Strengths of AI

AI provides:

Flexibility in handling real-world language

Reduced manual conversation handling

Improved tone, empathy, and engagement

Graceful handling of edge cases

Faster response times at scale

AI is adaptive—but not deterministic.

Limitations of AI

AI introduces risk if misapplied:

Outputs are non-deterministic

Responses require strong prompt governance

Behaviour is harder to audit and explain

Errors compound if AI is allowed to execute logic directly

Compliance risk increases without constraints

AI must be guided. Unconstrained AI amplifies risk.

4. The Core Insight: Automation Enforces Policy, AI Handles Exceptions

This distinction is the most important principle in this guide.

Automation is best used to enforce what must happen.

AI is best used to interpret what is happening.

Trying to reverse these roles creates fragile systems.

5. Where Most Go High Level Accounts Go Wrong

Failure Pattern One: AI Layered on Broken Automation

Common symptoms include:

Undefined or inconsistent pipelines

Inconsistent tagging

Multiple triggers firing unpredictably

No clear ownership model

AI does not fix chaos.

AI amplifies it.

Without a clean structure, AI outputs become noisy and unreliable.

Failure Pattern Two: Automation Attempting to Replace Judgment

This typically manifests as:

Twenty-branch workflows to handle objections

Over-engineered decision trees

Hundreds of tags and conditional paths

Workflow sprawl that no one can maintain

This creates technical debt and brittleness.

Automation cannot reason—it can only route.

Failure Pattern Three: AI Given Too Much Authority

High-risk patterns include:

AI moving pipeline stages autonomously

AI sending pricing or contractual terms

AI closing loops without validation

This introduces compliance, reputational, and commercial risk.
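One way to remove that authority without removing the AI is to treat every AI output as a proposal that automation accepts, queues for a person, or rejects. The Python sketch below is hypothetical: the action names and the two sets are illustrative policy, not GHL settings. Unknown actions are rejected by default, so adding a new AI capability requires an explicit policy change.

```python
from dataclasses import dataclass

# Hypothetical policy: which AI-proposed actions automation may run unattended.
AI_ALLOWED = {"send_reply", "add_note", "suggest_tag"}
# Actions the AI may suggest but a person must approve (stage moves, pricing, closing).
REQUIRES_HUMAN = {"move_stage", "send_pricing", "close_opportunity"}


@dataclass(frozen=True)
class Proposal:
    """What the AI returns: a suggested action, never a command."""
    action: str
    payload: str


def gate(proposal: Proposal) -> str:
    """Automation decides: 'execute', 'human_review', or 'reject'."""
    if proposal.action in AI_ALLOWED:
        return "execute"
    if proposal.action in REQUIRES_HUMAN:
        return "human_review"
    return "reject"  # unrecognised actions are refused by default
```

This gate checks the action type only. A `send_reply` whose text quotes prices or contract terms would still pass, so message content needs its own check before sending.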

6. The Correct Architectural Model

Principle: Automation Owns State, AI Owns Interpretation

The most resilient GHL architectures follow a layered model.

Layer One: Data and Structure (Automation)

This layer defines the source of truth and the rules that govern state:

Pipelines and stages

Tags and classifications

Ownership rules

Timing constraints

SLA enforcement

This layer must be deterministic and stable.

Layer Two: Interpretation (AI)

This layer interprets human input:

Message intent classification

Objection detection

Urgency assessment

Context summarisation

AI informs the system—it does not control it.

Layer Three: Execution (Automation)

Automation resumes control:

Routing leads

Triggering sequences

Assigning tasks

Escalating to humans

AI classifies.

Automation executes.
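The three layers can be expressed as a division of write access. In this hypothetical Python sketch, `Contact` holds the state (Layer One), the AI returns a frozen `Interpretation` that has no way to change that state (Layer Two), and `execute` applies fixed rules to turn labels into stage changes and tasks (Layer Three). The labels, stage names, and the one-hour escalation task are assumptions for illustration; the AI call itself is omitted.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Contact:
    """Layer One (automation): the record of state. Only execute() below changes it."""
    stage: str = "New Lead"
    tags: Set[str] = field(default_factory=set)
    tasks: List[str] = field(default_factory=list)


@dataclass(frozen=True)
class Interpretation:
    """Layer Two (AI): read-only labels describing a message. Frozen, so it cannot act."""
    intent: str    # hypothetical labels, e.g. "ready_to_book", "objection"
    urgency: str   # hypothetical labels, "high" or "normal"
    summary: str


def execute(contact: Contact, ai: Interpretation) -> Contact:
    """Layer Three (automation): fixed rules map AI labels onto state changes and tasks."""
    if ai.intent == "ready_to_book" and contact.stage == "New Lead":
        contact.stage = "Booking"
    elif ai.intent == "objection":
        contact.tags.add("objection")
        contact.tasks.append(f"Follow up on objection: {ai.summary}")
    if ai.urgency == "high":
        contact.tasks.append("Escalate to owner within 1 hour")  # assumed SLA
    return contact
```

Because `Interpretation` is frozen, assigning to one of its fields raises `dataclasses.FrozenInstanceError`. The only path from AI output to state runs through `execute`, which is where audits and tests can focus.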

7. Practical Use Cases Done Correctly

Lead Reply Handling

First, automation captures the inbound message and metadata.

Next, AI interprets intent—such as pricing enquiry, objection, readiness to book, or general question.

Finally, automation routes the lead into the correct workflow, calendar flow, or human escalation.

The system remains auditable, scalable, and compliant.
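The three steps can be sketched in Python as below. Everything here is hypothetical: `classify` is a keyword stub standing in for the AI step so the example runs offline, and the route names, intent labels, and the 0.7 confidence threshold are illustrative. The design choice worth copying is the fallback: an unknown label or a low-confidence one goes to a human rather than to a guessed workflow.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Tuple


@dataclass(frozen=True)
class InboundMessage:
    """Step 1 (automation): the captured message plus metadata."""
    contact_id: str
    channel: str          # e.g. "sms", "chat"
    received_at: datetime
    body: str


# Step 3 routing table; destinations are hypothetical placeholders.
ROUTES = {
    "pricing_enquiry": "workflow:pricing_follow_up",
    "objection": "workflow:objection_handling",
    "ready_to_book": "calendar:booking_flow",
    "general_question": "workflow:faq_reply",
}
MIN_CONFIDENCE = 0.7  # assumed threshold; tune against reviewed conversations


def classify(body: str) -> Tuple[str, float]:
    """Step 2 stand-in for the AI: returns (intent, confidence). A real system calls an LLM."""
    text = body.lower()
    if "book" in text or "appointment" in text:
        return "ready_to_book", 0.9
    if "price" in text or "cost" in text:
        return "pricing_enquiry", 0.85
    if "not sure" in text or "too expensive" in text:
        return "objection", 0.8
    if text.rstrip().endswith("?"):
        return "general_question", 0.75
    return "general_question", 0.5


def route(msg: InboundMessage) -> str:
    """Step 3 (automation): deterministic routing, with human escalation as the safe default."""
    intent, confidence = classify(msg.body)
    if intent not in ROUTES or confidence < MIN_CONFIDENCE:
        return "escalate:human"
    return ROUTES[intent]
```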

No-Show Management

Automation detects the no-show event.

AI generates an empathetic, brand-aligned follow-up message.

Automation enforces timing, retries, and escalation logic.

Tone is flexible.

Logic remains fixed.
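The split between fixed logic and flexible tone shows up clearly in a small scheduling sketch. In this hypothetical Python example, automation owns the timing (`RETRY_OFFSETS`) and the escalation after the last attempt, while `draft_message` stands in for the AI step that only supplies wording. The offsets and action strings are assumed values, and business-hour adjustment is left out for brevity.

```python
from datetime import datetime, timedelta
from typing import Callable, List, Tuple

# Assumed retry policy for illustration; set these from your own business rules.
RETRY_OFFSETS = [timedelta(minutes=15), timedelta(days=1), timedelta(days=3)]
ESCALATE_AFTER_LAST = timedelta(days=1)


def plan_no_show_follow_up(
    missed_at: datetime, draft_message: Callable[[int], str]
) -> List[Tuple[datetime, str]]:
    """Automation fixes when each step happens; draft_message (the AI stand-in) only writes text."""
    plan = [
        (missed_at + offset, f"send:{draft_message(attempt)}")
        for attempt, offset in enumerate(RETRY_OFFSETS, start=1)
    ]
    # After the final retry, hand the contact to a person instead of messaging again.
    plan.append((missed_at + RETRY_OFFSETS[-1] + ESCALATE_AFTER_LAST, "escalate:owner_task"))
    return plan
```

Swapping `draft_message` for a different model or prompt changes the wording of each message but cannot change how many messages are sent or when.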

Reputation Management

Automation monitors for new reviews.

AI drafts a context-appropriate response.

Automation posts the reply and logs it in the CRM.

Consistency is maintained without manual effort.
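A detail that keeps this consistent is idempotency: a monitor that polls for reviews will see the same review many times, and it should reply only once. The hypothetical Python sketch below tracks processed review IDs; `draft` stands in for the AI step and `post` for the posting action, and neither is a GHL API. The in-memory `log` list stands in for the CRM record.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass(frozen=True)
class Review:
    review_id: str
    rating: int
    text: str


@dataclass
class ReviewResponder:
    """Hypothetical monitor: replies once per review and logs every reply it posts."""
    draft: Callable[[Review], str]      # stand-in for the AI drafting step
    post: Callable[[str, str], None]    # stand-in for posting (review_id, reply)
    seen: Set[str] = field(default_factory=set)
    log: List[Dict[str, object]] = field(default_factory=list)  # stand-in for the CRM log

    def process(self, reviews: List[Review]) -> int:
        """Handle any reviews not seen before; return how many replies were posted."""
        posted = 0
        for review in reviews:
            if review.review_id in self.seen:
                continue  # already answered: never reply twice to the same review
            reply = self.draft(review)
            self.post(review.review_id, reply)
            self.seen.add(review.review_id)
            self.log.append({"review_id": review.review_id, "rating": review.rating, "reply": reply})
            posted += 1
        return posted


posted_replies = []
responder = ReviewResponder(
    draft=lambda r: f"Thank you for the {r.rating}-star review!",
    post=lambda review_id, reply: posted_replies.append((review_id, reply)),
)
batch = [Review("r1", 5, "Great service"), Review("r2", 4, "Quick and friendly")]
first_pass = responder.process(batch)   # both reviews are new
second_pass = responder.process(batch)  # same batch polled again: nothing to do
```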

8. Governance, Risk, and Compliance

Automation Governance

Strong automation governance includes:

Versioned workflows

Documented triggers and outcomes

Minimal branching

Clear ownership and responsibility

Automation should be boring.

Boring is good.
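These rules are easier to enforce when each workflow has a written record that can be checked. The Python sketch below assumes you keep such records outside GHL (for example in a repository or a spreadsheet export); `WorkflowSpec`, its fields, and the limit of five branches are hypothetical house conventions, not platform features.

```python
from dataclasses import dataclass
from typing import List

MAX_BRANCHES = 5  # assumed house limit for "minimal branching"


@dataclass(frozen=True)
class WorkflowSpec:
    """Hypothetical documentation record kept for each workflow."""
    name: str
    version: str        # e.g. "1.2.0"; bump on every published change
    trigger: str        # documented trigger
    outcome: str        # documented expected outcome
    owner: str          # person or team responsible
    branch_count: int


def governance_issues(spec: WorkflowSpec) -> List[str]:
    """Return human-readable problems; an empty list means the record passes."""
    issues = []
    if not spec.version:
        issues.append("missing version")
    if not spec.trigger or not spec.outcome:
        issues.append("trigger or outcome undocumented")
    if not spec.owner:
        issues.append("no owner")
    if spec.branch_count > MAX_BRANCHES:
        issues.append(f"{spec.branch_count} branches exceeds limit of {MAX_BRANCHES}")
    return issues
```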

AI Governance

AI governance requires:

Prompt version control

Defined scope of authority

Human override paths

Logging and review

A useful rule of thumb:

AI can speak. Automation decides.
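Prompt version control, logging, and a human override path can share one simple structure. In this hypothetical Python sketch, prompts are append-only (each edit creates a new version), every AI output is logged with the prompt version that produced it, and an override adds a new entry rather than overwriting the original, so reviewers can see both. None of these names are GHL features.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional


@dataclass
class PromptRegistry:
    """Append-only prompt store: publishing never edits an existing version."""
    versions: Dict[str, List[str]] = field(default_factory=dict)

    def publish(self, name: str, text: str) -> int:
        self.versions.setdefault(name, []).append(text)
        return len(self.versions[name])  # 1-based version number

    def get(self, name: str, version: int) -> str:
        return self.versions[name][version - 1]


@dataclass(frozen=True)
class AIEvent:
    prompt_name: str
    prompt_version: int
    output: str
    overridden_by: Optional[str] = None  # set when a person replaces the AI output
    logged_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


audit_log: List[AIEvent] = []


def log_ai_output(name: str, version: int, output: str) -> AIEvent:
    event = AIEvent(name, version, output)
    audit_log.append(event)
    return event


def human_override(event: AIEvent, reviewer: str, replacement: str) -> AIEvent:
    """Record the human's version as a new entry; the original AI output stays in the log."""
    corrected = AIEvent(event.prompt_name, event.prompt_version, replacement, overridden_by=reviewer)
    audit_log.append(corrected)
    return corrected


registry = PromptRegistry()
v1 = registry.publish("no_show_reply", "Be warm and brief.")
v2 = registry.publish("no_show_reply", "Be warm and brief, and offer two new times.")
draft = log_ai_output("no_show_reply", v2, "Sorry we missed you today!")
final = human_override(draft, "sam", "Sorry we missed you. Would Tuesday or Wednesday work?")
```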

9. Measurement and Optimisation

Automation Performance Metrics

Automation should be evaluated using:

Lead response time

Stage progression rates

Drop-off points

SLA adherence

These are operational metrics.
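These metrics are simple to compute once events carry timestamps and stage history. The Python sketch below is illustrative: the five-minute SLA, the stage names, and the sample data are assumptions. Unanswered leads count as SLA misses rather than being silently dropped, and a drop-off point is simply a stage transition with a low progression rate.

```python
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional, Tuple

Lead = Tuple[datetime, Optional[datetime]]  # (created_at, first_reply_at or None)
SLA = timedelta(minutes=5)                   # assumed first-response target


def response_times(leads: List[Lead]) -> List[timedelta]:
    """Time from lead creation to first reply, for leads that received one."""
    return [reply - created for created, reply in leads if reply is not None]


def sla_adherence(leads: List[Lead], sla: timedelta = SLA) -> float:
    """Share of all leads answered within the SLA; unanswered leads count as misses."""
    if not leads:
        return 0.0
    met = sum(1 for created, reply in leads if reply is not None and reply - created <= sla)
    return met / len(leads)


def stage_progression(histories: List[List[str]], from_stage: str, to_stage: str) -> float:
    """Of contacts that reached from_stage, the share that later reached to_stage."""
    reached = [h for h in histories if from_stage in h]
    if not reached:
        return 0.0
    return sum(1 for h in reached if to_stage in h[h.index(from_stage) + 1:]) / len(reached)


# Illustrative sample data only.
t = datetime(2025, 5, 1, 9, 0)
leads = [(t, t + timedelta(minutes=2)), (t, t + timedelta(minutes=9)),
         (t, None), (t, t + timedelta(minutes=4))]
histories = [["New", "Booked", "Won"], ["New", "Booked"], ["New"]]
```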

AI Performance Metrics

AI should be evaluated on:

Conversation resolution rate

Human handoff frequency

Booking completion post-interaction

Sentiment and response quality

AI must always be measured in the context of automation outcomes.
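The AI metrics can be computed the same way, provided each conversation is tagged with what happened. The sketch below is hypothetical: the `Conversation` fields are assumed tags that automation would set, and sentiment scoring is omitted because it needs a separate model. Booking completion is counted from the automation side (an appointment actually booked), which keeps the AI measured against automation outcomes rather than against its own transcripts.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Conversation:
    resolved_by_ai: bool   # closed without a person stepping in
    handed_off: bool       # escalated to a human at any point
    booked_after: bool     # an appointment was booked after the AI interaction


def ai_metrics(convos: List[Conversation]) -> Dict[str, float]:
    """Resolution, handoff, and post-interaction booking rates over a set of conversations."""
    n = len(convos)
    if n == 0:
        return {"resolution_rate": 0.0, "handoff_rate": 0.0, "booking_rate": 0.0}
    return {
        "resolution_rate": sum(c.resolved_by_ai for c in convos) / n,
        "handoff_rate": sum(c.handed_off for c in convos) / n,
        "booking_rate": sum(c.booked_after for c in convos) / n,
    }
```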

10. Forward-Looking View: 2026 and Beyond

As Go High Level continues expanding its native AI capabilities:

Automation discipline becomes more important, not less

Clean data architecture becomes a competitive advantage

AI increasingly recommends rather than decides

Agencies with governance outperform AI-heavy but structurally weak competitors

AI accelerates systems.

It does not replace systems.

Final Takeaway

Automation and AI are not competitors inside Go High Level.

They are different layers of the same operating model.

Automation provides reliability, compliance, and scale

AI provides interpretation, adaptability, and human-like interaction

When combined intentionally:

Automation prevents chaos

AI absorbs complexity

The system scales without becoming fragile

The future of high-performing GHL accounts is not more AI.

It is better automation, augmented by AI in precisely the right places.