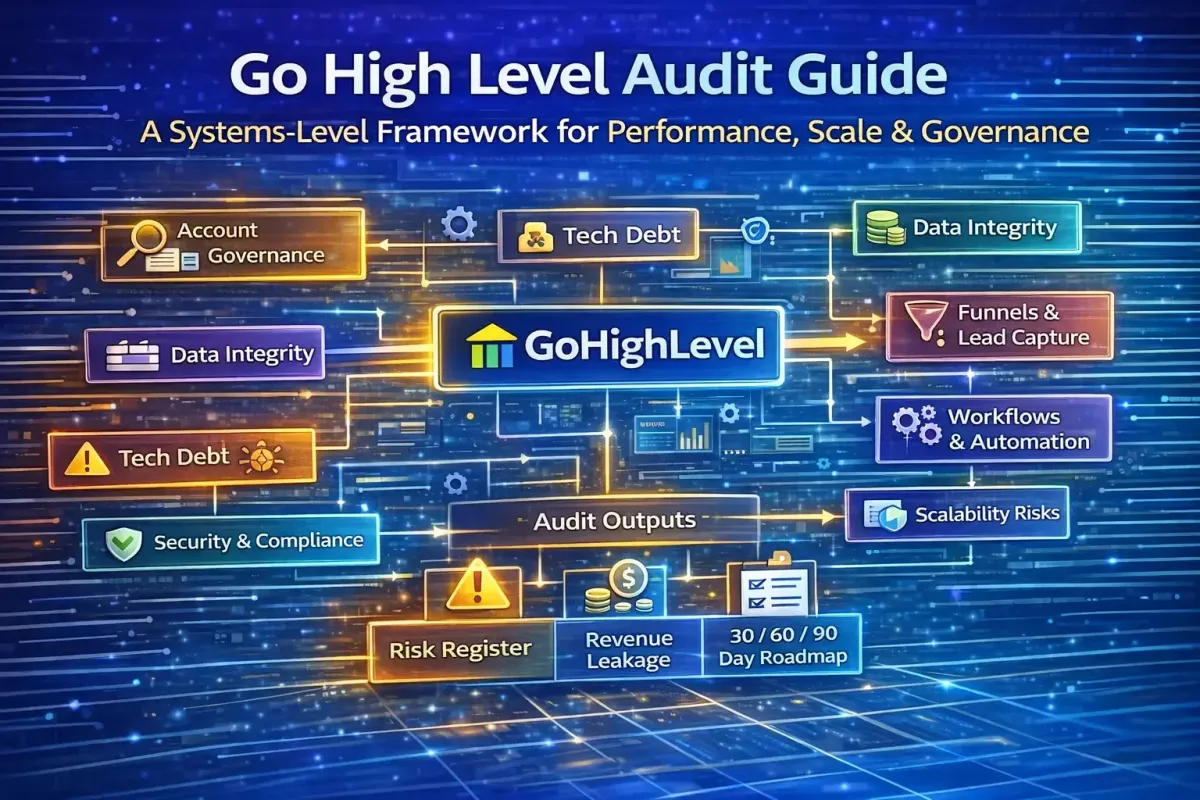

The Ultimate GoHighLevel Audit Guide

A Go High Level (GHL) audit is not a surface-level checklist, a feature walkthrough, or a critique of individual funnels in isolation. When performed correctly, it is a structured systems review of how an account is architected, how data flows through it, how automation behaves under real conditions, and whether the platform is genuinely supporting revenue growth—or quietly undermining it.

Most underperforming Go High Level accounts do not fail because the platform lacks capability. They fail because of poor operating models, fragmented data structures, unmanaged automation debt, and weak governance. These issues compound over time, creating “leaky buckets” where leads stall, tracking breaks, reporting becomes unreliable, and teams lose trust in the CRM.

This guide presents a repeatable, agency-grade audit framework designed to identify technical risk, revenue leakage, and scalability constraints—then translate findings into a prioritised improvement roadmap. It is written from a RevOps, automation, and scale perspective, suitable for internal audits or productised client services.

What a Go High Level Audit Actually Is (and Is Not)

A professional GHL audit evaluates whether the platform is functioning as a revenue operating system, not just a marketing tool.

A Go High Level audit is not:

A list of toggles to turn on

A funnel design critique in isolation

A cosmetic review of dashboards

A “best practices” blog checklist

A Go High Level audit should answer five critical questions:

Is the account architected around a clear operating model?

Is the data clean, reliable, and decision-grade?

Do automations reduce friction—or hide it?

Can the system scale without compounding risk?

Does reporting reflect commercial truth rather than vanity metrics?

Audit Outputs: What You Should Deliver

A proper audit must produce tangible, decision-ready outputs. At minimum:

Risk register: what will break, when, and why

Efficiency gaps: duplication, manual work, latency

Automation debt map: fragile logic vs scalable logic

Data integrity scorecard

Prioritised remediation roadmap (30 / 60 / 90 days)

For agencies, this is what transforms an audit from a technical exercise into a commercial advisory product.

Audit Structure Overview

This framework is organised into ten core sections:

Account Architecture & Governance

Data Model & Contact Integrity

Pipeline & Lifecycle Architecture

Funnels, Websites & Lead Capture

Workflow & Automation Logic

Communications & Deliverability

AI, Attribution & Conversion Tracking

Reporting & Decision Support

Security, Compliance & Access Control

Scalability & Future-Proofing

Each section covers:

What to inspect

Common failure patterns

What “good” looks like

Corrective actions

1. Account Architecture & Governance

What to Audit

Agency vs location separation

Snapshot inheritance and usage

Naming conventions (pipelines, workflows, tags, fields)

User roles and permission boundaries

Common Failure Patterns

No documented ownership model

Shared admin access across staff

Inconsistent naming (e.g. New Lead, new-lead, lead_new)

Workflows built directly in production with no versioning

What “Good” Looks Like

Single accountable account owner

Role-based access aligned to function (Sales ≠ Marketing ≠ Ops)

Clear environment discipline: build → test → deploy

Version-controlled, documented snapshots

Corrective Actions

Define ownership per system domain (data, automation, reporting)

Lock admin access to senior operators only

Enforce naming conventions platform-wide

Introduce snapshot versioning and change logs
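A naming audit can be partly automated once tag, pipeline, or workflow names have been exported. The sketch below is a minimal illustration, not a platform feature: it assumes the names are available as a plain list of strings, and `canonical_key` and `find_naming_variants` are hypothetical helpers. Names are reduced to sorted lowercase tokens so that spelling, separator, and word-order variants of the same concept land in one group.

```python
import re
from collections import defaultdict


def canonical_key(name: str) -> str:
    """Reduce a name to lowercase tokens, sorted so word order does not matter."""
    tokens = re.findall(r"[a-z0-9]+", name.lower())
    return " ".join(sorted(tokens))


def find_naming_variants(names: list[str]) -> dict[str, list[str]]:
    """Group names by canonical key and keep only groups with more than one spelling."""
    groups: dict[str, set[str]] = defaultdict(set)
    for name in names:
        groups[canonical_key(name)].add(name)
    return {key: sorted(variants) for key, variants in groups.items() if len(variants) > 1}


# Hypothetical tag list exported from an account.
tags = ["New Lead", "new-lead", "lead_new", "Booked Call", "Customer"]
variants = find_naming_variants(tags)
print(variants)
```

Each group returned is a candidate for consolidation under one agreed name. Sorting tokens is a deliberately loose match, so a reviewer should confirm that grouped names really mean the same thing before merging them.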

2. Data Model & Contact Integrity

What to Audit

Custom fields: naming, types, usage frequency

Tags vs fields vs opportunities

Source and attribution fields

Duplicate contact logic

Common Failure Patterns

Tags used as permanent data storage

Multiple fields for the same concept (Budget, Monthly Budget, Spend)

Free-text fields where controlled enums are required

No deduplication rules

What “Good” Looks Like

Fields = data, Tags = state

Single source of truth per data point

Dropdowns, radios, and validation for key inputs

Automated deduplication logic

Corrective Actions

Rationalise custom fields into a controlled schema

Migrate “data tags” into proper fields

Implement phone/email-based dedupe workflows

Standardise source tracking across all capture points
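The phone/email dedupe idea above can be prototyped offline against a contact export before any workflow is built. This is a sketch under stated assumptions: contacts are plain dictionaries with hypothetical `id`, `email`, and `phone` keys, phone numbers already carry a country code, and matching is first-seen rather than a full transitive merge.

```python
import re
from typing import Optional


def normalise_email(email: Optional[str]) -> Optional[str]:
    """Lowercase and trim an email so trivially different spellings compare equal."""
    cleaned = (email or "").strip().lower()
    return f"email:{cleaned}" if cleaned else None


def normalise_phone(phone: Optional[str]) -> Optional[str]:
    """Keep digits only. Assumes numbers already include a country code."""
    digits = re.sub(r"\D", "", phone or "")
    return f"phone:{digits}" if digits else None


def find_duplicates(contacts: list[dict]) -> list[tuple[str, str]]:
    """Return (duplicate_id, original_id) pairs where email or phone matches an earlier contact."""
    seen: dict[str, str] = {}
    pairs = []
    for contact in contacts:
        candidates = (normalise_email(contact.get("email")), normalise_phone(contact.get("phone")))
        keys = [key for key in candidates if key]
        original = next((seen[key] for key in keys if key in seen), None)
        if original is not None:
            pairs.append((contact["id"], original))
        for key in keys:
            # New keys on a duplicate point back at the original record.
            seen.setdefault(key, original or contact["id"])
    return pairs


# Hypothetical export rows.
contacts = [
    {"id": "c1", "email": "Ana@Example.com ", "phone": "+44 20 7946 0000"},
    {"id": "c2", "email": "ana@example.com", "phone": None},
    {"id": "c3", "email": "other@example.com", "phone": "44 (20) 7946-0000"},
    {"id": "c4", "email": "new@example.com", "phone": "+1 555 0100"},
]
duplicates = find_duplicates(contacts)
print(duplicates)
```

The output is a review list, not a merge instruction: shared phone numbers (households, switchboards) are a common source of false positives.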

3. Pipeline & Lifecycle Architecture

What to Audit

Number of pipelines and their purpose

Stage definitions and semantics

Stage-based automation triggers

Revenue attribution logic

Common Failure Patterns

Pipelines reflecting internal teams rather than customer lifecycle

Stages with no entry/exit criteria

Manual stage changes with no automation

Revenue inconsistently recorded

What “Good” Looks Like

One pipeline per business state

Clear lifecycle semantics (Lead → MQL → SQL → Won/Lost)

Stage changes trigger automation and reporting

Revenue tied to opportunities, not contacts

Corrective Actions

Redesign pipelines around lifecycle, not org chart

Define acceptance criteria per stage

Automate stage progression where possible

Enforce mandatory opportunity values
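Stage acceptance criteria are easier to enforce when they are written down as data. The sketch below encodes the Lead → MQL → SQL → Won/Lost lifecycle from this section as a transition table. Two details are assumptions made for illustration: that an opportunity can move to Lost from any open stage, and that a value becomes mandatory from SQL onwards. The `stage` and `value` keys are hypothetical.

```python
# Allowed next stages for each current stage (assumed rules, adjust per account).
ALLOWED_TRANSITIONS = {
    "Lead": {"MQL", "Lost"},
    "MQL": {"SQL", "Lost"},
    "SQL": {"Won", "Lost"},
    "Won": set(),
    "Lost": set(),
}


def check_stage_change(opportunity: dict, new_stage: str) -> list[str]:
    """Return the reasons a stage change should be rejected, or an empty list if it is valid."""
    problems = []
    current = opportunity["stage"]
    if new_stage not in ALLOWED_TRANSITIONS.get(current, set()):
        problems.append(f"{current} -> {new_stage} is not an allowed transition")
    if new_stage in ("SQL", "Won") and not opportunity.get("value"):
        problems.append("opportunity value is required from SQL onwards")
    return problems


skipped = check_stage_change({"stage": "Lead", "value": None}, "SQL")
valid = check_stage_change({"stage": "MQL", "value": 5000}, "SQL")
print(skipped)
print(valid)
```

Running historical stage changes through a check like this gives a quick count of how often stages were skipped or revenue was left blank.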

4. Funnels, Websites & Lead Capture

What to Audit

Funnel inventory (live vs legacy vs test)

Step paths and URLs

Form and survey field mapping

Thank-you page logic

Common Failure Patterns

Auto-generated URLs (e.g. /home-1)

Forms creating partial or duplicate contacts

Thank-you pages without automation triggers

No source or UTM capture

What “Good” Looks Like

Clean, intentional URLs

Explicit field mapping on every form

Every submission triggers lifecycle logic

First-party tracking embedded at capture

Corrective Actions

Rename and rationalise all live funnel paths

Standardise form templates

Ensure every conversion fires a tracking event

Implement consistent UTM capture
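To test whether capture points receive consistent UTM data, landing-page URLs from ad platforms or form submissions can be checked in bulk. The sketch below uses only the Python standard library. The choice of which parameters count as required is an assumption, and `extract_utm` and `missing_utm` are illustrative names.

```python
from urllib.parse import parse_qs, urlparse

UTM_KEYS = ("utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content")


def extract_utm(url: str) -> dict[str, str]:
    """Return the five standard UTM parameters, lowercased, with '' for any that are absent."""
    query = parse_qs(urlparse(url).query)
    return {key: query.get(key, [""])[0].strip().lower() for key in UTM_KEYS}


def missing_utm(url: str, required=("utm_source", "utm_medium", "utm_campaign")) -> list[str]:
    """List the required UTM parameters that the URL does not carry."""
    utm = extract_utm(url)
    return [key for key in required if not utm[key]]


# Hypothetical landing URL taken from an ad.
url = "https://example.com/book-a-call?utm_source=Google&utm_medium=cpc&gclid=abc"
utm = extract_utm(url)
gaps = missing_utm(url)
print(utm)
print(gaps)
```

Lowercasing values at capture avoids "Google" and "google" appearing as separate sources in reports.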

5. Workflow & Automation Logic (Critical Section)

This is where most value—and most risk—lives.

What to Audit

Trigger logic and entry conditions

Branching depth and complexity

Stop rules, suppressions, and re-entry logic

Error logs and execution failures

Common Failure Patterns

Multiple workflows firing on the same trigger

No “Stop on Response” in nurtures

Time delays masking weak logic

AI steps acting on incomplete data

What “Good” Looks Like

One trigger = one responsibility

Event-driven logic over time delays

Explicit exits and suppressions

AI only after data validation

Rule of thumb: If you cannot diagram a workflow on one page, it is too complex. Combining too many functions into one workflow creates a single point of failure: if it stops working for whatever reason, every function it handles stops with it.

Corrective Actions

Consolidate overlapping workflows

Introduce naming and documentation standards

Add explicit stop and exit conditions

Refactor time-based logic into event-based logic
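The "multiple workflows firing on the same trigger" pattern can be surfaced from a workflow inventory. This sketch assumes the inventory has been compiled by hand or exported into dictionaries with hypothetical `name`, `trigger`, `filter`, and `status` keys. It does not call any platform API.

```python
from collections import defaultdict


def find_trigger_collisions(workflows: list[dict]) -> dict[tuple[str, str], list[str]]:
    """Return each (trigger, filter) pair that starts more than one published workflow."""
    by_trigger: dict[tuple[str, str], list[str]] = defaultdict(list)
    for workflow in workflows:
        if workflow["status"] != "published":
            continue  # Drafts cannot fire, so they are ignored here.
        by_trigger[(workflow["trigger"], workflow.get("filter", ""))].append(workflow["name"])
    return {trigger: names for trigger, names in by_trigger.items() if len(names) > 1}


# Hypothetical inventory rows.
workflows = [
    {"name": "WF - New Lead - SMS", "trigger": "form_submitted", "filter": "Contact Form", "status": "published"},
    {"name": "WF - New Lead - Email Nurture", "trigger": "form_submitted", "filter": "Contact Form", "status": "published"},
    {"name": "WF - Old Nurture", "trigger": "form_submitted", "filter": "Contact Form", "status": "draft"},
    {"name": "WF - Booking Confirm", "trigger": "appointment_booked", "filter": "", "status": "published"},
]
collisions = find_trigger_collisions(workflows)
print(collisions)
```

A collision is not automatically a defect, but each one should have a documented reason or be consolidated.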

6. Communications & Deliverability

What to Audit

Email domain authentication (SPF, DKIM, DMARC)

SMS sender IDs and routing

Message frequency and escalation logic

Consent and opt-out handling
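For the email authentication item, the DMARC record published for a sending domain can be reviewed once its TXT value has been looked up. The sketch below only parses a record string that has already been retrieved; the DNS lookup itself is out of scope. The findings it raises reflect one reasonable audit stance and are assumptions rather than a complete validation of the standard.

```python
def parse_dmarc(record: str) -> dict[str, str]:
    """Split a DMARC TXT record into its tag=value pairs."""
    tags = {}
    for part in record.split(";"):
        if "=" in part:
            key, value = part.split("=", 1)
            tags[key.strip().lower()] = value.strip()
    return tags


def dmarc_findings(record: str) -> list[str]:
    """Return audit findings for a DMARC record; an empty list means nothing was flagged."""
    tags = parse_dmarc(record)
    findings = []
    if tags.get("v") != "DMARC1":
        findings.append("record does not declare v=DMARC1")
    policy = tags.get("p", "").lower()
    if policy not in ("none", "quarantine", "reject"):
        findings.append("missing or invalid p= policy")
    elif policy == "none":
        findings.append("policy is p=none (monitoring only, no enforcement)")
    if "rua" not in tags:
        findings.append("no rua= address, so aggregate reports are not collected")
    return findings


weak = dmarc_findings("v=DMARC1; p=none")
strong = dmarc_findings("v=DMARC1; p=reject; rua=mailto:dmarc@example.com")
print(weak)
print(strong)
```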

Common Failure Patterns

No authenticated sending domain

Same message copied across channels

No quiet hours or throttling

Over-automation without human oversight

What “Good” Looks Like

Channel-appropriate messaging

Behaviour-based escalation

Deliverability monitoring

Human-in-the-loop safeguards

Corrective Actions

Authenticate all sending domains

Rewrite templates per channel

Introduce frequency caps and quiet hours

Audit consent capture points
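Quiet hours are simple to reason about once written as a function. The sketch below assumes a quiet window of 21:00 to 08:00 and assumes the timestamp passed in is already in the contact's local time. Both are illustrative choices, not platform defaults.

```python
from datetime import datetime, time, timedelta


def in_quiet_hours(moment: time, start: time = time(21, 0), end: time = time(8, 0)) -> bool:
    """True if moment falls inside the quiet window, including windows that cross midnight."""
    if start <= end:
        return start <= moment < end
    return moment >= start or moment < end


def next_allowed_send(local_now: datetime, start: time = time(21, 0), end: time = time(8, 0)) -> datetime:
    """Return local_now if sending is allowed, otherwise the next moment the quiet window ends."""
    if not in_quiet_hours(local_now.time(), start, end):
        return local_now
    candidate = local_now.replace(hour=end.hour, minute=end.minute, second=0, microsecond=0)
    if candidate <= local_now:
        candidate += timedelta(days=1)  # Window ends tomorrow morning.
    return candidate


late_evening = next_allowed_send(datetime(2024, 5, 1, 22, 30))
early_morning = next_allowed_send(datetime(2024, 5, 1, 6, 0))
midday = next_allowed_send(datetime(2024, 5, 1, 12, 0))
print(late_evening, early_morning, midday)
```

During an audit, running recent send timestamps through `in_quiet_hours` shows how many messages went out at times the business would not defend.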

7. AI, Attribution & Conversion Tracking

What to Audit

Where AI is used and why

Input data quality

Offline conversion tracking

CRM ↔ Ads feedback loops

Common Failure Patterns

AI acting on junk or incomplete data

No attribution hierarchy

Google Ads optimised on low-quality leads

No closed-loop reporting

What “Good” Looks Like

AI amplifies clean systems only

Offline conversions mapped to revenue stages

Ads optimised on outcomes, not clicks

CRM as the attribution authority

Corrective Actions

Gate AI actions behind data validation

Implement offline conversion tracking

Align CRM stages with ad conversions

Remove vanity conversions from ad platforms
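Gating AI actions behind data validation amounts to a routing decision made before the AI step runs. The following sketch shows that decision in isolation. The required field names are hypothetical and should be replaced with the account's own schema, and the email pattern is a loose sanity check rather than full address validation.

```python
import re

EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical field names; substitute the account's own schema.
REQUIRED_FIELDS = ("first_name", "email", "source", "service_interest")


def validation_errors(contact: dict) -> list[str]:
    """List the reasons a contact record is not ready for an AI step."""
    errors = [
        f"missing {field}"
        for field in REQUIRED_FIELDS
        if not str(contact.get(field) or "").strip()
    ]
    email = str(contact.get("email") or "").strip()
    if email and not EMAIL_PATTERN.match(email):
        errors.append("email is not well formed")
    return errors


def route_contact(contact: dict) -> str:
    """Send complete records to the AI step and everything else to human review."""
    return "ai_step" if not validation_errors(contact) else "human_review"


complete = {"first_name": "Ana", "email": "ana@example.com", "source": "google", "service_interest": "Implants"}
partial = {"first_name": "Ana", "email": "ana@example", "source": ""}
print(route_contact(complete), route_contact(partial), validation_errors(partial))
```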

8. Reporting & Decision Support

What to Audit

KPI definitions and ownership

Dashboard accuracy

Lag between action and insight

Metric consistency

Common Failure Patterns

Vanity metrics only (opens, clicks)

Manual reporting exports

Conflicting numbers across dashboards

No revenue attribution

What “Good” Looks Like

One owner per KPI

Lifecycle-based reporting

Automated dashboards

Revenue-first measurement

Corrective Actions

Define a core KPI set

Rebuild dashboards around decisions, not data

Eliminate redundant reports

Tie reporting directly to the pipeline and revenue
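Conflicting dashboard numbers often trace back to KPIs being calculated in several places with slightly different definitions. One way to check is to recompute a small core set from a single opportunity export and compare. The sketch below assumes hypothetical `stage` and `value` keys, and the KPI definitions shown are examples rather than a prescribed set.

```python
def revenue_kpis(opportunities: list[dict]) -> dict[str, float]:
    """Compute a small revenue-first KPI set from one list of opportunities."""
    total = len(opportunities)
    won = [o for o in opportunities if o["stage"] == "Won"]
    closed = [o for o in opportunities if o["stage"] in ("Won", "Lost")]
    won_revenue = sum(o["value"] for o in won)
    return {
        "opportunities": total,
        # Win rate is measured against closed opportunities only.
        "win_rate": len(won) / len(closed) if closed else 0.0,
        "won_revenue": won_revenue,
        "revenue_per_opportunity": won_revenue / total if total else 0.0,
    }


# Hypothetical export rows.
opportunities = [
    {"stage": "Won", "value": 4000},
    {"stage": "Won", "value": 2000},
    {"stage": "Lost", "value": 3000},
    {"stage": "SQL", "value": 1000},
]
kpis = revenue_kpis(opportunities)
print(kpis)
```

If a dashboard disagrees with figures produced this way, the difference usually points to a definitional gap (for example, whether open opportunities count towards win rate) that needs an owner.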

9. Security, Compliance & Access Control

What to Audit

User access levels

Consent tracking

Data retention policies

Audit logs

Common Failure Patterns

Everyone is admin

No consent fields

No data deletion process

Shared logins

What “Good” Looks Like

Least-privilege access

Explicit consent capture

GDPR-aligned retention policies

Regular access audits

Corrective Actions

Revoke unnecessary access

Implement consent fields and workflows

Define data retention rules

Schedule quarterly access reviews
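A quarterly access review can start from a user list and a few explicit rules. The sketch below assumes a user export with hypothetical `email`, `role`, and `last_login` keys. The admin limit, the 90-day staleness threshold, and the list of generic mailbox names used to spot likely shared logins are all assumptions to tune per account.

```python
from datetime import date

# Mailbox names that usually indicate a shared login rather than a named person.
GENERIC_MAILBOXES = {"info", "admin", "team", "sales", "support", "hello"}


def access_findings(users: list[dict], today: date, max_admins: int = 2, stale_days: int = 90) -> list[str]:
    """Flag excess admins, likely shared logins, and accounts that have gone stale."""
    findings = []
    admins = [user for user in users if user["role"] == "admin"]
    if len(admins) > max_admins:
        findings.append(f"{len(admins)} admins, expected at most {max_admins}")
    for user in users:
        mailbox = user["email"].split("@")[0].lower()
        if mailbox in GENERIC_MAILBOXES:
            findings.append(f"{user['email']} looks like a shared login")
        if (today - user["last_login"]).days > stale_days:
            findings.append(f"{user['email']} has not logged in for over {stale_days} days")
    return findings


# Hypothetical user export.
users = [
    {"email": "owner@example.com", "role": "admin", "last_login": date(2024, 5, 28)},
    {"email": "sales@example.com", "role": "admin", "last_login": date(2024, 5, 30)},
    {"email": "jo@example.com", "role": "admin", "last_login": date(2024, 1, 10)},
    {"email": "sam@example.com", "role": "user", "last_login": date(2024, 5, 29)},
]
findings = access_findings(users, today=date(2024, 6, 1))
print(findings)
```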

10. Scalability & Future-Proofing

What to Audit

Snapshot portability

Workflow modularity

Documentation quality

Team onboarding friction

Common Failure Patterns

Hard-coded values everywhere

Client-specific logic embedded globally

No documentation

No testing framework

What “Good” Looks Like

Modular, reusable workflows

Snapshot-first mindset

Documented SOPs

Measured automation ROI

Corrective Actions

Refactor workflows into reusable modules

Externalise variables where possible

Document all core systems

Introduce testing and review cycles
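Externalising variables means message and workflow content refers to named settings instead of hard-coded client details. The sketch below illustrates the principle with Python's standard `string.Template`. The setting names and the template text are hypothetical, and inside the platform the equivalent would be custom values or fields rather than Python code.

```python
from string import Template

# One reusable template; nothing client-specific is written into it.
SMS_TEMPLATE = Template("Hi $first_name, thanks for contacting $business_name. Book here: $booking_url")

# Hypothetical per-location settings kept outside the template.
LOCATION_SETTINGS = {
    "business_name": "Example Dental",
    "booking_url": "https://example.com/book",
}


def render_message(template: Template, contact: dict, settings: dict) -> str:
    """Fill the template from settings and contact data; raises KeyError if a variable is missing."""
    return template.substitute({**settings, **contact})


message = render_message(SMS_TEMPLATE, {"first_name": "Ana"}, LOCATION_SETTINGS)
print(message)
```

Using `substitute` rather than `safe_substitute` is deliberate: a missing variable fails loudly during testing instead of sending a message with a blank or a raw placeholder in it.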

Prioritisation Framework (Recommended)

Score each issue on:

Impact (revenue or risk)

Effort

Urgency

Then group into:

Tier 1 (Next 7–14 Days)

Critical fixes: broken flows, tracking failures, security risks.

Tier 2 (Next 30–60 Days)

Structural improvements: pipeline redesign, workflow refactoring, dashboards.

Tier 3 (Next 90+ Days)

Optimisation and scaling: experimentation, advanced segmentation, AI expansion.

Each item must have:

Owner

Deadline

Success metric
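The scoring step can be made explicit so that two auditors rank the same issue the same way. The guide does not prescribe a formula, so everything numeric below is an assumption: a 1 to 5 scale for each dimension, a score of impact × urgency ÷ effort, and the tier thresholds. Treat it as a starting point to calibrate, not a fixed method.

```python
def priority_score(impact: int, effort: int, urgency: int) -> float:
    """Higher impact and urgency raise the score; higher effort lowers it. Inputs are 1 to 5."""
    return impact * urgency / effort


def assign_tier(issue: dict) -> str:
    """Map an issue to a tier using assumed thresholds."""
    score = priority_score(issue["impact"], issue["effort"], issue["urgency"])
    if score >= 8:
        return "Tier 1"
    if score >= 2:
        return "Tier 2"
    return "Tier 3"


# Hypothetical audit findings with owner-assigned scores.
issues = [
    {"name": "Conversion tracking broken", "impact": 5, "effort": 2, "urgency": 5},
    {"name": "Pipeline redesign", "impact": 4, "effort": 4, "urgency": 3},
    {"name": "AI expansion", "impact": 3, "effort": 4, "urgency": 1},
]
tiers = {issue["name"]: assign_tier(issue) for issue in issues}
print(tiers)
```

Dividing by effort favours quick wins, which suits Tier 1. Security risks may deserve an override that places them in Tier 1 regardless of score.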

Strategic Conclusion

Go High Level is not limited by features. It is limited by how intentionally it is designed.

High-performing GHL accounts share the same traits: disciplined architecture, clean data, explicit ownership, and automation that supports real business processes rather than compensating for weak ones.

AI will only amplify whatever foundation it is placed on. Clean logic scales. Messy logic compounds risk.

A strong audit does not just identify what is broken—it clarifies what the system is optimised for. When performed correctly, a Go High Level audit becomes a strategic lever for performance, governance, and sustainable scale—not just a technical exercise.